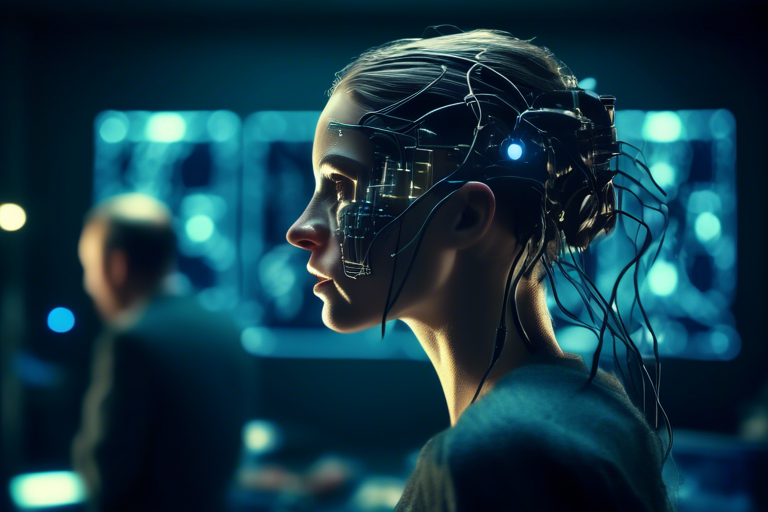

Neural Network Basics

Neural networks are a type of machine learning model loosely inspired by the structure and function of the human brain. They consist of interconnected nodes, or neurons, arranged in layers. Each connection carries a numeric weight that is learned from data, which lets the network process and analyze complex inputs.

Operations Overview

Neural networks operate by taking input data, passing it through one or more intermediate layers of neurons (the hidden layers), and producing an output. At each neuron, the inputs are multiplied by weights, summed, and shifted by a bias. The result is then passed through an activation function, which introduces non-linearity into the model.
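Concretely, each neuron computes a weighted sum of its inputs plus a bias and applies an activation function. The following is a minimal sketch in plain Python of one forward pass through a tiny fully connected network. The function name `dense_forward`, the layer sizes, and all weight values are hypothetical and chosen only so the arithmetic is easy to follow.

```python
def dense_forward(inputs, weights, biases, activation):
    """Compute one fully connected layer: activation(w . x + b) for each neuron.

    weights[j] holds the incoming weights of neuron j; biases[j] is its bias.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b  # weighted sum plus bias
        outputs.append(activation(z))
    return outputs


def relu(z):
    return max(0.0, z)


# Hypothetical network: 2 inputs -> hidden layer of 2 ReLU neurons -> 1 linear output.
x = [1.0, 2.0]
hidden = dense_forward(x, weights=[[0.5, -1.0], [1.5, 0.25]], biases=[0.0, -1.0], activation=relu)
output = dense_forward(hidden, weights=[[1.0, 2.0]], biases=[0.5], activation=lambda z: z)
print(hidden, output)  # → [0.0, 1.0] [2.5]
```

The first hidden neuron's weighted sum is negative (0.5 - 2.0 = -1.5), so ReLU outputs 0. This shows how the activation function changes what flows to the next layer.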

Characteristics of Features

Features are the individual measurable properties of the input (for example, a patient's age or a pixel's intensity) that a neural network uses to make predictions or classifications. Getting the most out of features involves selecting and engineering the most relevant and informative ones, which can improve the model's performance.
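One common feature-engineering step is rescaling numeric features to comparable ranges. When features have very different scales, gradient-based training can converge slowly. The sketch below standardizes a single feature column to zero mean and unit standard deviation. The function name and the sample values are hypothetical illustrations, not a prescribed method.

```python
from statistics import mean, pstdev


def standardize(column):
    """Rescale one numeric feature to zero mean and unit (population) standard deviation."""
    mu = mean(column)
    sigma = pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]  # a constant feature carries no information
    return [(v - mu) / sigma for v in column]


# Hypothetical feature values, e.g. house sizes in square metres.
sizes = [50.0, 70.0, 90.0, 110.0]
z = standardize(sizes)
print(z)
```

In practice, the mean and standard deviation are usually computed on the training data only and then reused to transform validation and test data. This keeps information from those sets from leaking into training.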

Types of Input Data

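Neural networks can handle many types of input data, including numerical values, text, and images. Because a network operates only on numbers, the type of data shapes both the network's design and the preprocessing it requires. Text is usually converted into numeric vectors such as word counts or token IDs, and images are represented as arrays of pixel intensities.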
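To illustrate how preprocessing depends on the data type, here is a minimal sketch of two simple conversions. It turns text into a bag-of-words count vector over a fixed vocabulary and scales 8-bit grayscale pixels to [0, 1]. Both helpers and the vocabulary are hypothetical examples. Real pipelines often use learned tokenizers and embeddings instead.

```python
def bag_of_words(text, vocabulary):
    """Turn a string into a fixed-length vector of word counts over a given vocabulary."""
    index = {word: i for i, word in enumerate(vocabulary)}
    vector = [0] * len(vocabulary)
    for token in text.lower().split():
        if token in index:  # words outside the vocabulary are ignored
            vector[index[token]] += 1
    return vector


def scale_pixels(pixels):
    """Map 8-bit grayscale intensities (0-255) to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


vocab = ["good", "bad", "movie"]  # hypothetical vocabulary
print(bag_of_words("Good movie good acting", vocab))  # → [2, 0, 1]
print(scale_pixels([0, 51, 255]))
```

Either way, the result is a fixed-length list of numbers that can be fed to the network's input layer.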

Common Activation Functions

Activation functions introduce non-linearity into a neural network, allowing it to capture complex patterns in the data. Without them, a stack of layers would collapse into a single linear transformation. Common activation functions include ReLU and tanh, which are typical in hidden layers, and sigmoid and softmax, which are typical in output layers because they produce probabilities.
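The four functions named above can be written in a few lines each. This is a plain-Python sketch for scalars (softmax takes a list of scores). Library implementations operate on whole arrays, but the formulas are the same.

```python
import math


def sigmoid(z):
    # Squashes to (0, 1). Branch on sign so math.exp never gets a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    return math.tanh(z)  # squashes to (-1, 1) and is zero-centred


def relu(z):
    return max(0.0, z)  # passes positive values, zeroes negative ones


def softmax(scores):
    # Subtract the max score before exponentiating for numerical stability;
    # the result is unchanged because softmax is invariant to adding a constant.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0), tanh(0.0), relu(-3.0), relu(2.0))  # → 0.5 0.0 0.0 2.0
print(softmax([1.0, 2.0, 3.0]))  # three probabilities summing to 1
```
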

Structure of Hidden Layers

Hidden layers are the layers of neurons between the input and output layers of a neural network. Their number (depth) and size (width) are design choices that depend on the complexity of the problem. Deeper networks can learn more intricate patterns, but each added layer also adds parameters to train.
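A quick way to see how depth and width affect model size is to count parameters. The sketch below assumes every layer is fully connected, so each one has an `n_in × n_out` weight matrix plus one bias per neuron. The helper name and the example layer sizes are hypothetical.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists neurons per layer, input first and output last, e.g.
    [4, 8, 8, 3] means 4 inputs, two hidden layers of 8 neurons, and 3 outputs.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus one bias per neuron
    return total


print(count_parameters([4, 8, 8, 3]))       # → 139
print(count_parameters([4, 32, 32, 32, 3]))  # → 2371
```

Widening the hidden layers from 8 to 32 and adding a third one increases the parameter count roughly seventeenfold in this example. This is part of the cost of extra capacity.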

Functions of Output Layer

The output layer of a neural network produces the final prediction or classification from the information processed by the hidden layers. Its size and activation function depend on the type of problem. Regression typically uses one linear (identity) unit per target, binary classification uses one sigmoid unit, and multi-class classification uses one softmax unit per class.
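The sketch below shows how the raw (pre-activation) values of an output layer might be turned into a prediction for each of the three problem types mentioned above. The function `predict` and the problem-type strings `"regression"`, `"binary"`, and `"multiclass"` are hypothetical names used only for illustration. The 0.5 threshold for binary classification is a common default, not a requirement.

```python
import math


def predict(raw_outputs, problem):
    """Turn the output layer's raw values into a prediction (hypothetical helper)."""
    if problem == "regression":
        # One linear (identity) unit: the raw value is the prediction.
        return raw_outputs[0]
    if problem == "binary":
        # One sigmoid unit gives P(positive class); threshold it at 0.5.
        z = raw_outputs[0]
        p = 1.0 / (1.0 + math.exp(-z)) if z >= 0 else math.exp(z) / (1.0 + math.exp(z))
        return 1 if p >= 0.5 else 0
    if problem == "multiclass":
        # One softmax unit per class: return the index of the most probable class.
        m = max(raw_outputs)
        exps = [math.exp(v - m) for v in raw_outputs]
        total = sum(exps)
        probs = [e / total for e in exps]
        return max(range(len(probs)), key=probs.__getitem__)
    raise ValueError(f"unknown problem type: {problem}")


print(predict([3.7], "regression"))             # → 3.7
print(predict([-0.4], "binary"))                # → 0
print(predict([0.2, 1.5, -0.3], "multiclass"))  # → 1
```
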

Backpropagation Concept

Backpropagation is the algorithm that computes how the error between predicted and actual outputs changes with respect to each weight and bias. It applies the chain rule of calculus layer by layer, from the output back toward the input. An optimization algorithm such as gradient descent then uses the resulting gradients to update the parameters and reduce the error.
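The chain rule is easiest to see on a single neuron. This minimal sketch assumes a sigmoid neuron with one input and a squared-error loss `0.5 * (a - y)**2`. Both are illustrative choices. The sketch computes the gradients by backpropagation, checks them against a finite-difference estimate, and then takes one gradient-descent step.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron: 0.5 * (sigmoid(w*x + b) - y)^2."""
    return 0.5 * (sigmoid(w * x + b) - y) ** 2


def backprop(w, b, x, y):
    """Gradients of the loss with respect to w and b via the chain rule."""
    z = w * x + b          # forward: pre-activation
    a = sigmoid(z)         # forward: activation (the prediction)
    dL_da = a - y          # derivative of 0.5 * (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)  # derivative of the sigmoid w.r.t. z
    dL_dz = dL_da * da_dz  # chain rule
    return dL_dz * x, dL_dz * 1.0  # dz/dw = x, dz/db = 1


# Compare the analytic gradients with central finite differences.
w, b, x, y = 0.8, -0.2, 1.5, 1.0
grad_w, grad_b = backprop(w, b, x, y)
eps = 1e-6
num_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
num_b = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
print(grad_w, num_w)
print(grad_b, num_b)

# One gradient-descent step (learning rate 0.5) moves the parameters downhill.
lr = 0.5
new_w, new_b = w - lr * grad_w, b - lr * grad_b
print(loss(w, b, x, y), loss(new_w, new_b, x, y))
```

In a multi-layer network, the same `dL_dz` quantity is passed backward through each layer's weights, which is where the name comes from.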

Training Techniques

Training a neural network involves feeding it labeled data, computing a loss function that measures prediction error, backpropagating that error through the network, and updating the weights and biases. These steps are repeated over many iterations. Mini-batch gradient descent makes each update cheaper by using a small subset of the data, while regularization and early stopping help prevent overfitting.
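To keep the three techniques visible without a full network, the sketch below applies them to the simplest possible model, a single linear unit `y ≈ w*x + b`. The same training loop structure carries over to neural networks. Several choices here are assumptions: L2 weight decay stands in for "regularization," the data is synthetic (generated from `y = 3x + 1` plus noise), and all hyperparameter values are illustrative rather than recommended.

```python
import random


def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(train_data, val_data, lr=0.05, batch_size=4, l2=1e-3,
          max_epochs=200, patience=10, seed=0):
    """Fit y = w*x + b with mini-batch gradient descent, L2 weight decay,
    and early stopping on validation loss. Returns ((val_loss, w, b), epochs_run)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    best = (float("inf"), w, b)
    epochs_without_improvement = 0
    data = list(train_data)  # shuffle a copy, not the caller's list
    epochs_run = 0
    for _ in range(max_epochs):
        epochs_run += 1
        rng.shuffle(data)  # new mini-batch composition each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the mean squared error over this mini-batch.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            grad_w += 2 * l2 * w  # gradient of the L2 penalty l2 * w^2 (bias not penalized)
            w -= lr * grad_w
            b -= lr * grad_b
        val_loss = mse(w, b, val_data)
        if val_loss < best[0]:
            best = (val_loss, w, b)  # remember the best parameters seen so far
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # early stopping: validation loss stopped improving
    return best, epochs_run


# Synthetic data (an assumption for illustration): y = 3x + 1 plus Gaussian noise.
data_rng = random.Random(42)
points = []
for _ in range(50):
    x = data_rng.uniform(-1.0, 1.0)
    points.append((x, 3.0 * x + 1.0 + data_rng.gauss(0.0, 0.1)))
train_set, val_set = points[:40], points[40:]

(best_val, w, b), epochs_run = train(train_set, val_set)
print(f"w={w:.2f} b={b:.2f} val_mse={best_val:.4f} epochs={epochs_run}")
```

Returning the parameters from the best validation epoch, rather than the last one, is what makes early stopping act as a guard against overfitting.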

Classification Algorithms

Neural networks can be used for a variety of classification tasks, including image recognition, sentiment analysis, and disease diagnosis. Common neural network architectures for classification include feedforward (fully connected) networks; convolutional neural networks (CNNs), which suit grid-like data such as images; and recurrent neural networks (RNNs), which suit sequential data such as text. Networks with many layers of any of these kinds are referred to as deep learning models. Each architecture has strengths and weaknesses, so it is essential to choose the one best suited to a particular task.
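What distinguishes a CNN from a feedforward network is its core operation, where a small kernel of weights slides across the input and the same weights are reused at every position. The sketch below is a minimal 1D version of that operation (no padding, stride 1). Like most CNN libraries, it computes cross-correlation, meaning the kernel is not flipped. It is only the building block, not a full classifier, and the kernel values are a hypothetical edge detector.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1D convolution as used in CNN layers (strictly, cross-correlation:
    the kernel is not flipped). The same kernel weights are reused at every position."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]


# A hypothetical edge-detecting kernel responds only where the signal jumps.
signal = [0, 0, 0, 1, 1, 1]
print(conv1d(signal, [-1, 1]))  # → [0.0, 0.0, 1.0, 0.0, 0.0]
```

Here, mapping 6 inputs to 5 outputs takes only 2 weights and 1 bias. A fully connected layer of the same shape would need 30 weights and 5 biases. This weight sharing is one reason CNNs scale well to images.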